Pose-to-Motion: Cross-Domain Motion Retargeting with Pose Prior

Qingqing Zhao

Stanford University

Peizhuo Li

ETH Zurich

Wang Yifan

Stanford University

Olga Sorkine-Hornung

ETH Zurich

Gordon Wetzstein

Stanford University

Video

Abstract

Creating believable motions for various characters has long been a goal in computer graphics. Current learning-based motion synthesis methods depend on extensive motion datasets, which are often challenging, if not impossible, to obtain. Pose data, on the other hand, is more accessible: static posed characters are easier to create and can even be extracted from images using recent advances in computer vision. In this paper, we utilize this alternative data source and introduce a neural motion synthesis approach based on retargeting. Our method generates plausible motions for characters that have only pose data by transferring motion from an existing motion capture dataset of another character, whose skeleton can be drastically different. Our experiments show that our method effectively combines the motion features of the source character with the pose features of the target character, and performs robustly with small or noisy pose datasets, ranging from a few artist-created poses to noisy poses estimated directly from images. Additionally, a user study indicated that a majority of participants found our retargeted motion more enjoyable to watch and more lifelike in appearance, with fewer artifacts.

Overview

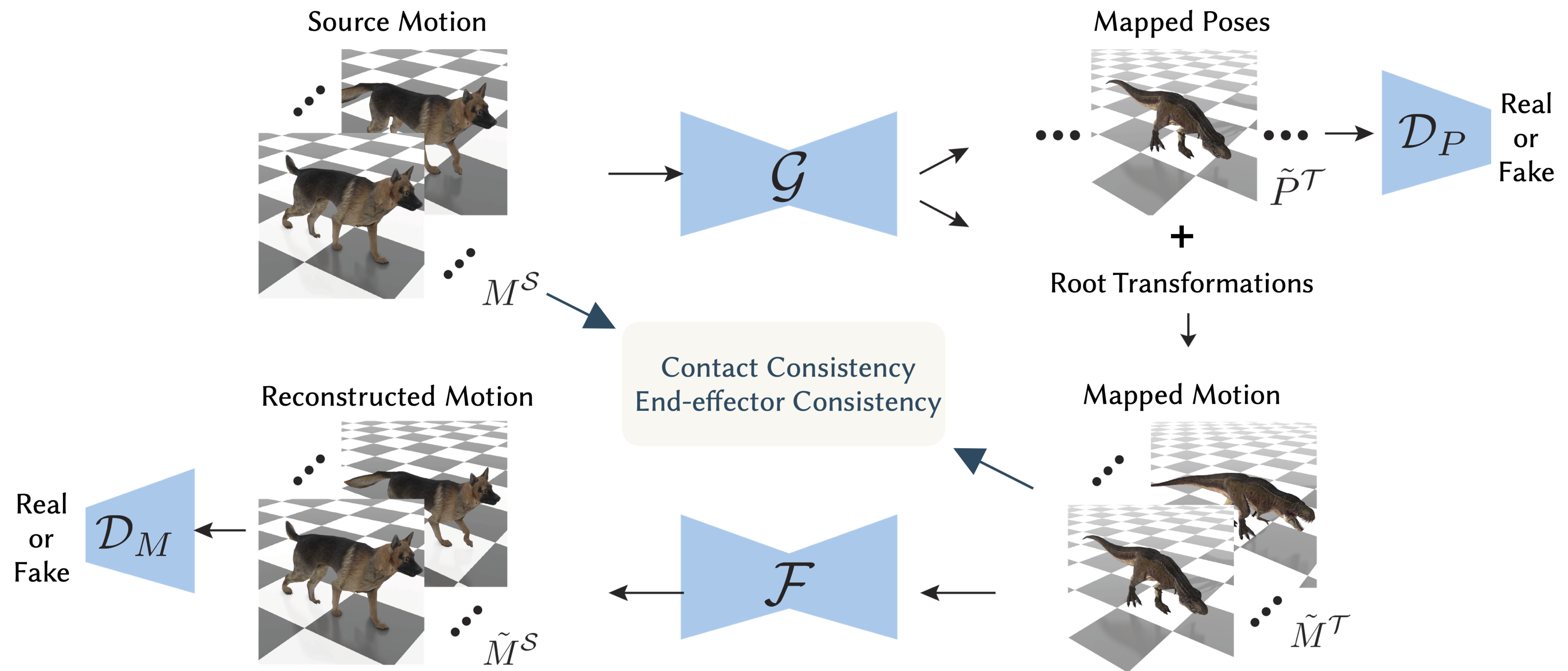

Our method builds on an asymmetric CycleGAN. The first half of the cycle maps a source-domain motion sequence to a sequence of target-domain poses and root transformations; each generated pose is compared individually against the target pose dataset by a pose discriminator. The second half of the cycle maps the poses and root transformations back to a source-domain motion sequence, which is supervised with a reconstruction loss and with an adversarial loss from a motion discriminator. Enforcing contact and end-effector consistency implicitly regularizes the root prediction, leading to more realistic motion.
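A minimal sketch of how the generator-side objective of such an asymmetric cycle could be assembled is shown below, with toy callables standing in for the neural networks. The function names, the loss weights `w_rec` and `w_adv`, the window length, and the least-squares GAN form of the adversarial term are hypothetical choices for illustration, not the paper's implementation, and the contact and end-effector terms are omitted. The point it illustrates is the asymmetry: the pose discriminator scores each generated target pose on its own, while the motion discriminator scores temporal windows of the reconstructed source motion.

```python
import numpy as np


def lsgan_generator_term(scores):
    # Least-squares GAN generator term (an assumed choice): pull scores toward 1 ("real").
    return float(np.mean((np.asarray(scores, dtype=float) - 1.0) ** 2))


def asymmetric_cycle_loss(src_motion, g_src_to_tgt, g_tgt_to_src,
                          pose_disc, motion_disc, window=4,
                          w_rec=1.0, w_adv=1.0):
    """Hypothetical generator-side loss for one source clip.

    src_motion: (T, D_src) array of per-frame source-character features.
    g_src_to_tgt(motion) -> (poses (T, D_pose), roots (T, D_root)).
    g_tgt_to_src(poses, roots) -> (T, D_src) reconstructed source motion.
    pose_disc(pose) and motion_disc(window) return a scalar "realness" score.
    """
    T = src_motion.shape[0]
    if T < window:
        raise ValueError("clip shorter than the motion-discriminator window")

    # First half of the cycle: source motion -> target poses + root transforms.
    tgt_poses, tgt_roots = g_src_to_tgt(src_motion)

    # Pose discriminator: each target pose is judged independently, since the
    # target domain provides only unordered poses, not motion sequences.
    pose_scores = [pose_disc(p) for p in tgt_poses]

    # Second half of the cycle: target poses + roots -> source-domain motion.
    rec_motion = g_tgt_to_src(tgt_poses, tgt_roots)
    rec_loss = float(np.mean((rec_motion - src_motion) ** 2))

    # Motion discriminator: judges sliding temporal windows of the reconstruction.
    motion_scores = [motion_disc(rec_motion[t:t + window])
                     for t in range(T - window + 1)]

    adv_loss = lsgan_generator_term(pose_scores) + lsgan_generator_term(motion_scores)
    return w_rec * rec_loss + w_adv * adv_loss, rec_loss


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clip = rng.normal(size=(16, 6))
    # Toy, lossless "generators": split features into 4 pose dims + 2 root dims.
    split = lambda m: (m[:, :4], m[:, 4:])
    merge = lambda p, r: np.concatenate([p, r], axis=1)
    always_real = lambda x: 1.0
    total, rec = asymmetric_cycle_loss(clip, split, merge, always_real, always_real)
    print(total, rec)  # both terms vanish here: 0.0 0.0
```

Scoring poses frame by frame is what lets the target domain be supervised with pose data alone; a sequence-level discriminator on the target side would need target motion clips, which are exactly what is assumed to be unavailable.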

Ablation Study

Without a motion prior, the generated motion may exhibit excessive jitter and artifacts such as foot sliding. Conversely, without the pose prior, the motion may appear smooth, but the poses can lack realism. Our approach strikes a balance, generating motion that is both smooth and composed of realistic poses.
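Jitter and foot sliding can be quantified in several ways. One common choice, sketched below under our own assumptions, measures jitter as the mean magnitude of joint jerk (the third finite difference of joint positions) and foot sliding as the mean horizontal speed of feet labeled as in contact. The function names, the Y-up convention (`up_axis=1`), the 30 fps default, and the per-frame contact labels are assumptions for illustration; this page does not state how the ablation was measured.

```python
import numpy as np


def jitter(positions, fps=30.0):
    """Mean joint jerk magnitude; positions: (T, J, 3) world-space joint positions."""
    dt = 1.0 / fps
    # Third finite difference along time approximates jerk (units / s^3).
    jerk = np.diff(positions, n=3, axis=0) / dt ** 3
    return float(np.mean(np.linalg.norm(jerk, axis=-1)))


def foot_sliding(foot_positions, contact, fps=30.0, up_axis=1):
    """Mean horizontal foot speed while in contact (assumed Y-up by default).

    foot_positions: (T, F, 3) positions of F foot joints.
    contact: (T, F) boolean contact labels (hypothetical input).
    """
    vel = np.diff(foot_positions, axis=0) * fps   # (T-1, F, 3), units / s
    horiz = np.delete(vel, up_axis, axis=-1)      # drop the vertical component
    speed = np.linalg.norm(horiz, axis=-1)        # (T-1, F)
    # Count a step as sliding only if the foot is in contact in both frames.
    mask = contact[1:] & contact[:-1]
    if not mask.any():
        return 0.0
    return float(speed[mask].mean())


if __name__ == "__main__":
    # A foot that keeps contact while moving 1 unit per frame along x slides at 30 units/s.
    feet = np.zeros((5, 1, 3))
    feet[:, 0, 0] = np.arange(5)
    contact = np.ones((5, 1), dtype=bool)
    print(foot_sliding(feet, contact))  # 30.0
```

A perfectly planted foot scores zero on the sliding measure, and constant-velocity motion scores zero on the jitter measure, so both favor the smooth, contact-respecting motion described above.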

Additional Animation (through retargeting)

Download

Citation

@inproceedings{qzhao2024P2M,
  title={Pose-to-Motion: Cross-Domain Motion Retargeting with Pose Prior},
  author={Qingqing Zhao and Peizhuo Li and Wang Yifan and Olga Sorkine-Hornung and Gordon Wetzstein},
  booktitle={SCA},
  year={2024}
}

Acknowledgements

The website template was borrowed from Michaël Gharbi.